3D Debugging - Debugging in Virtual Reality

In the course “Mixed Reality Lab”, Laura Ködel, Sven-Tizian Mauer, Bhanu chandan Machenahalli, and I set ourselves the task of bringing debugging into virtual space. The goal was to find out whether the additional space available in virtual reality can contribute to a better understanding of the problem to be fixed.

The result of this course was a paper, which you can find below.

This paper was written by: Sven-Tizian Mauer, Laura Ködel, Lukas Ertl, and Bhanu chandan Machenahalli

Abstract: This paper defines the concept of virtual 3D debugging, motivated by mental model research in information studies and by cognitive design elements that support the construction of a mental model during software exploration, and presents the findings of an exploratory study of the mental model of an academic information system. People create mental models of the systems and processes they engage with as part of the process of making sense of their experiences.

The lack of fundamental information about the program execution process is also a barrier to software engineering education and training. Debugging the code using traditional approaches proves to be difficult most of the time. As systems develop in complexity, it becomes more difficult for a person unfamiliar with source code to comprehend the mainly unseen structure. Historically, it has been difficult to visualize program architecture.

Individuals construct mental models of the systems and processes with which they engage as part of the process of making sense of their experiences. In this project, we have designed a virtual 3D environment in which programmers can walk through the code and virtually view and understand it, thus considerably enhancing the debugging process.

Keywords – Unity, 3D debugging, virtual reality, debugging, programming

I. Introduction

“Our reasoning processes – learning, understanding, problem solving – are largely dependent on our mental modelling mechanisms. … A model is not a merely simplified version of a certain reality. The main characteristic of a model is that it is a reality of its own. … Being structurally unitary and autonomous, the model very often imposes its constraints on the original and not vice versa!” [1]

The concept of sense-making is one of the most prevalent in contemporary research on human-information interaction. The idea of sense-making holds that the human experience is, in part, an intentional, socially anchored process of producing sense or meaning out of informational issues, discontinuities, and disconnections that we all deal with on a daily basis in both our personal and professional lives [2].

Users' mental models reveal diverse levels of comprehension of the systems or processes from which they are expected to get information. These models can be limited; they might merely represent analogies, which frequently function rather effectively. In a kind of “ignorance is bliss” approach, people adopt mental models that are partial and/or inaccurate yet nonetheless work with varying degrees of effectiveness and efficiency [1].

Programmers devote a significant amount of their time to debugging their systems. Using commands like step, continue, and goto, the programmer may set breakpoints, check storage, and control execution. The programmer then studies the execution of these instructions in detail, trying to figure out where the program deviates from the expected behavior and why.

There are a number of reasons why debugging is still difficult. Debugging is also getting more difficult as programs become more complicated. In order to uncover interesting future directions for virtual reality debugging assistance, this research examines the potential for various techniques of displaying code and managing the flow in a virtual environment.

II. Theoretical background

The sensemaking paradigm is one of the most influential concepts in current human–information interaction research. The concept of sense-making proposes that the human experience is in part an active, socially embedded process of making sense of information issues, discontinuities, and disconnections that we encounter on a regular basis in our personal and professional lives [2].

Kenneth Craik characterized mental models (MMs) as “small-scale models” [4] of reality in 1943, and this was the beginning of their contemporary history. According to cognitive psychologist Philip Johnson-Laird's approach to mental models, people use their models to infer linkages, anticipate outcomes, comprehend the systems they interact with, decide on a course of action, regulate that action, and experience events ‘by proxy’ [3]. This reasoning-centered approach contrasts with D. Norman's physically centered approach from around the same time, which depicted mental models as constrained internal diagrams that apply only to physical activities such as moving pulleys [5] [1].

Many scholars have used observation and experiments to study how programmers comprehend programs. As a result of this research, numerous cognitive theories to characterize the understanding process have been developed. Although the cognitive theories differ in style and content, many aspects and concepts that characterize fundamental actions in program comprehension are shared by them.

Many cognitive models have been presented during the last 20 years to describe how programmers interpret code throughout software maintenance and evolution. Debugging becomes more comprehensible when a programmer can build a mental model that corresponds to something in the real world [2]. This motivated us to create a 3D model that aids comprehension by integrating mental models and including temporal visualization.

Exaggerated or extreme assertions regarding the importance or nature of mental models have occasionally prevented a more in-depth analysis of their function in information seeking. Mental models can aid in understanding user behavior when considered as one significant aspect of sense-making rather than as the exclusive basis for system design. They are no more difficult to apply than any other internalized human phenomenon [1]. Although MMs are applied to specific situations by the person who created them, each MM has a different nature. Models often contain a great deal of what is accurate, since they are developed from what has worked in practice; yet because the people who construct these MMs seldom actively review them for defects, they may fail to register what is incorrect. The complexity of a task and, often, of its performance increases as the number of models needed for the task increases. However, the main challenge in using mental model theory to improve information system design lies in the enigma of the individual mind. By using interviews, observation, and think-aloud procedures, the grounded-theory, sense-making approach of communication and information studies reduces this challenge [1].

A mental model describes a user's mental representation of the program to be understood. A cognitive model explains the cognitive processes and information structures used to form that mental model. One of the important cognitive models described in [2] is the following.

Bottom-up theories of comprehension hold that understanding is developed bottom-up by reading the source code and then mentally chunking or grouping these statements into more abstract ideas. Programmers first create a low-level control abstraction of the program that captures the program's sequence of operations before creating a model that incorporates knowledge of the data flow.

We created a 3D virtual environment where programmers can view the source code, blocks for each function and a stack of blocks for each Step Into. The ability to virtually navigate the code allows the programmers to better grasp what is happening while they do so.

III. Cognitive Design Elements

According to Rebecca Kim, cognitive design is the impact a piece of writing has on its audience. Its elements are ways of understanding and emphasizing the subject and theme: structure, character, location, topology, rhetoric, and perspective [7].

The author focuses mostly on the cognitive factors to take into account when writing a book so that readers understand it better. This helped us to identify and consider design elements when creating a virtual 3D environment that helps programmers debug code while easily understanding it.

By drawing inspiration from the program-comprehension method [2], we used a bottom-up philosophy to improve program understanding while debugging.

Bottom-up comprehension requires reading program statements and structures and dissecting them into higher-level abstractions until a thorough understanding of the program is gained. The three main tasks involved in bottom-up understanding are identifying software objects and the relationships among them, examining code in delocalized plans, and producing abstractions (by chunking from lower-level units). A comprehension tool that supports bottom-up understanding tries to address these important objectives [2].

The fundamental components of the program, such as the code or visual representations of it, should be accessible as soon as the user starts using the 3D environment.

It is necessary to visualize software objects and the relationships among them.

IV. Current work

In their paper, Storey, Fracchia, and Müller [2] describe several cognitive design elements that assist in building a mental model during software exploration. Some of these design elements were considered in the implementation of this project. They are intended to provide the user with the most intuitive user interface possible and to assist in the process of understanding. Below is a list of the design elements considered in this project:

Reduce Disorientation:

When navigating larger programs or trying to solve more complex problems, disorientation can easily occur. For the user to focus fully on solving the problem, it is essential to prevent this disorientation. Storey, Fracchia, and Müller wrote: “Disorientation can be alleviated by removing some of the unnecessary cognitive overhead resulting from poorly designed user interfaces and by using specialized graphical views for presenting large amounts of information” [2].

This is exactly what we did for our project. Our 3D debugger is based on the Google Chrome Debugger. However, for the presentation in virtual reality we do not take over all control elements of the Chrome Debugger. This reduction of elements prevents the user from being overwhelmed and ensures that the user can fully concentrate on the actual problem. The three main elements that have been taken over from the Chrome Debugger into the virtual space are the code window, the call stack, and the Step Into function. The Step Into function is not displayed as a graphical button but is mapped to a physical button on the VR controller.

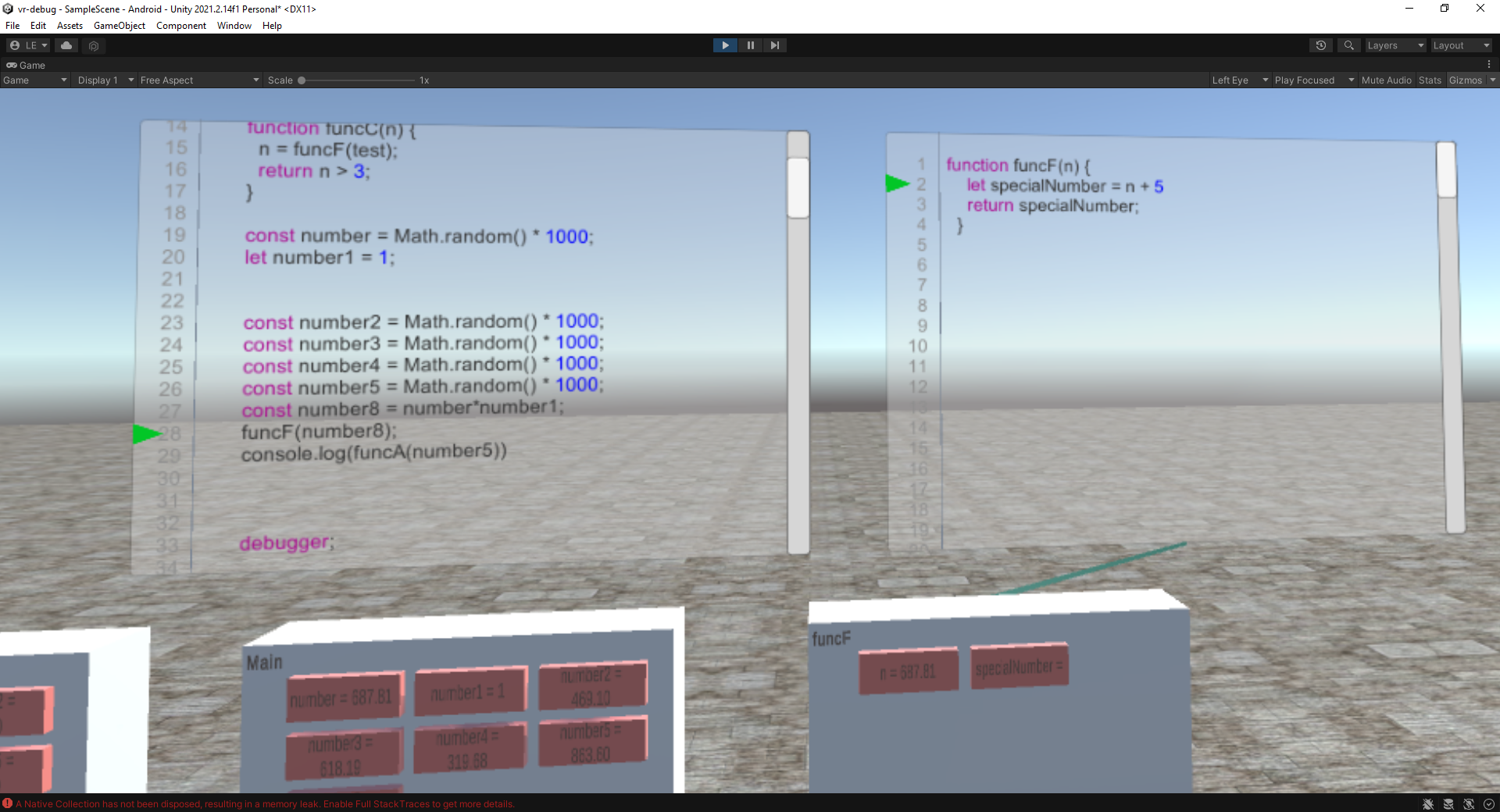

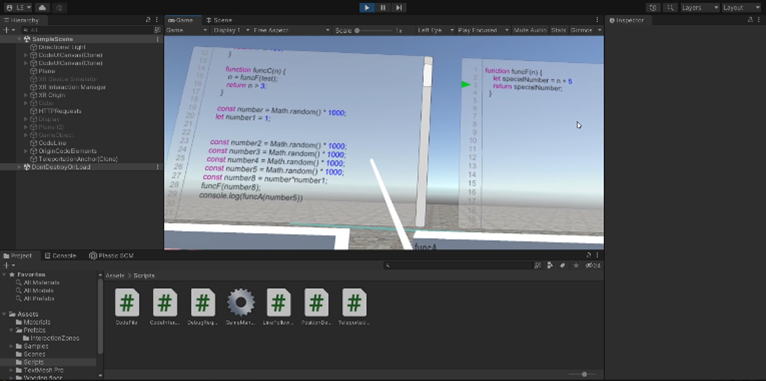

Figure 1 Screenshot 3D Debugger showing the Code Windows and the Call Stack

Code Window:

The code window floats in three-dimensional space in front of the user and allows the user to inspect the code relevant to the current debug process. As in traditional debuggers, the current line of code is marked with a green arrow on the side of the window. For a better overview, language-specific keywords are highlighted and the code lines are numbered. If the code to be debugged is spread over several files, several instances of the code window are lined up next to each other. A colored line between call stack and code window indicates in which file the debugger is currently working. This line is another measure to prevent user disorientation.
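The layout described above (numbered lines plus a marker on the current line) can be sketched in plain JavaScript. This is a minimal, text-only illustration and not the project's Unity code; the function name `renderCodeWindow` and the `=>` marker standing in for the green arrow are assumptions made for this example.

```javascript
// Hypothetical helper: renders source text the way the code window presents it,
// with line numbers and a marker on the line the debugger is currently at.
function renderCodeWindow(source, currentLine) {
  const lines = source.split("\n");
  const width = String(lines.length).length; // pad numbers to equal width
  return lines.map((text, index) => {
    const lineNumber = index + 1; // displayed line numbers are 1-based
    const marker = lineNumber === currentLine ? "=>" : "  ";
    return `${marker} ${String(lineNumber).padStart(width, " ")} | ${text}`;
  });
}

const demo = renderCodeWindow("function add(a, b) {\n  return a + b;\n}", 2);
console.log(demo.join("\n"));
```

Keeping the rendering a pure function of the source text and the current line makes it easy to redraw the window for any past step as well as for the current one.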

Call Stack:

In addition to the code windows, the current call stack is also displayed to the user. Here the called functions are listed from bottom to top. A function is graphically represented here as a three-dimensional cube. On the top left of the cube the name of the function is displayed and on the rest of the cube the variables of the function are displayed in the form of red boxes. These red boxes contain the name and the currently assigned value of the variable.
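One possible data model for these blocks is sketched below. It assumes that the debugger reports call frames innermost-first and that each frame already carries its variables as a plain object; the function name `toCallStackBlocks` and the field names are hypothetical and chosen only for illustration.

```javascript
// Hypothetical data model for the 3D call stack; names are illustrative only.
// Input: frames ordered innermost-first (an assumption about the debugger output).
function toCallStackBlocks(frames) {
  return frames
    .slice()      // do not mutate the caller's array
    .reverse()    // the outermost function becomes the bottom block
    .map((frame, level) => ({
      level,                      // 0 = bottom of the stack
      title: frame.functionName,  // shown on the top left of the cube
      // one entry per variable, corresponding to one red box on the cube
      boxes: Object.entries(frame.variables).map(
        ([name, value]) => `${name} = ${JSON.stringify(value)}`
      ),
    }));
}

const blocks = toCallStackBlocks([
  { functionName: "square", variables: { n: 3 } },
  { functionName: "main", variables: { input: "3", total: 0 } },
]);
console.log(blocks);
```

In this sketch the first function called ends up at level 0, which matches the bottom-to-top listing described above.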

Show the path that led to the current focus:

To help the user understand complex problems, it is useful to store and visualize small pieces of temporary information, such as the path that led to the current focus. On the World Wide Web, this design element is often represented in the form of breadcrumbs, which make it clear to the user which path he or she has already taken and how it led to the current focus [2]. In this project, the path the user has already taken is represented in the form of a timeline.

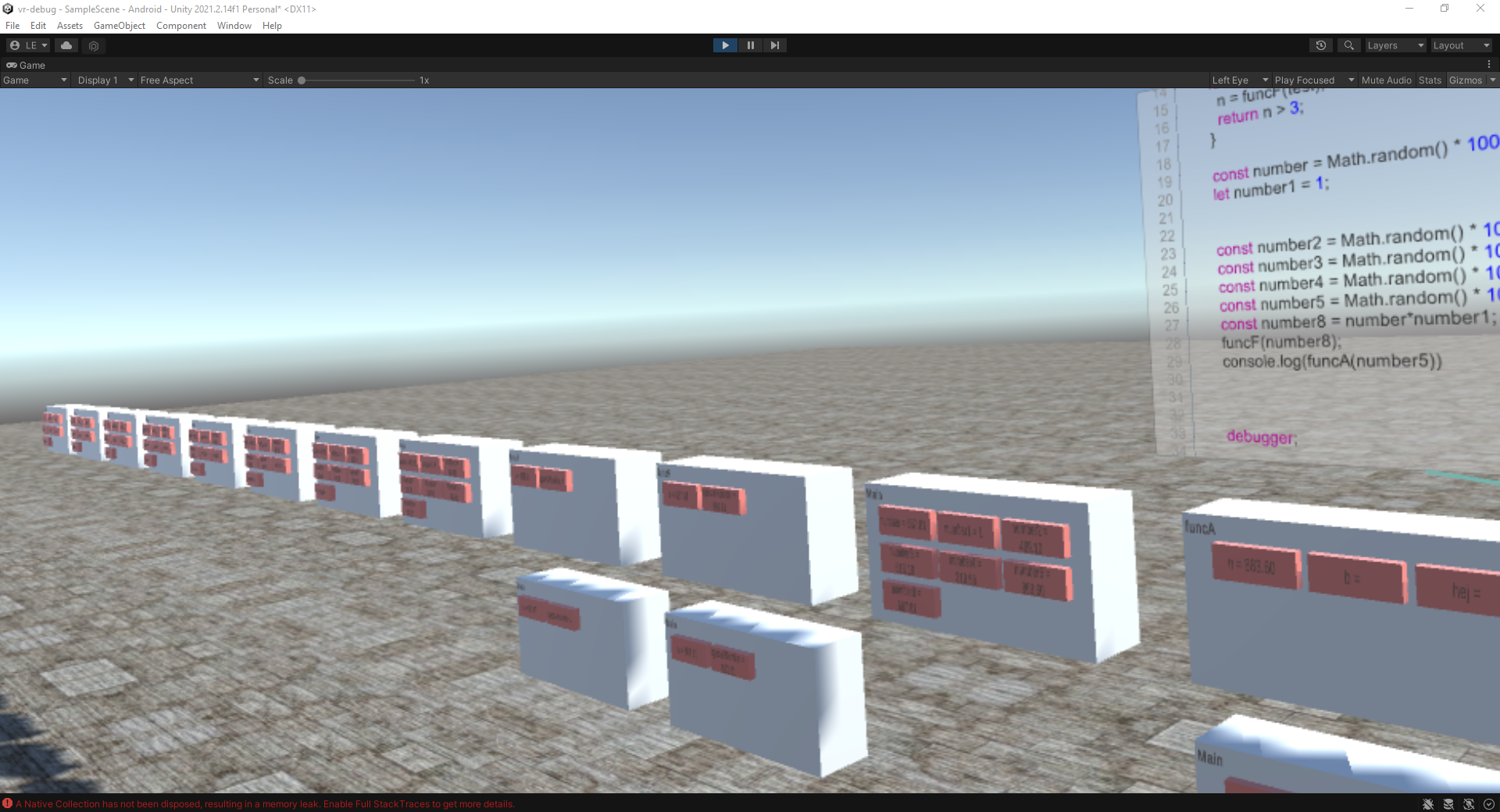

Figure 2 3D Debugging Timeline

This timeline is formed by call stacks that each represent the program state at a different point in the past. Since this takes place in three-dimensional space, the user can, if necessary, simply turn their head to the left or even walk to the desired location to explore the program path that has been taken.
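A timeline of this kind can be modelled as a list of snapshots, one per debug step. The following is a minimal sketch under that assumption; the class name `DebugTimeline` and its methods are hypothetical, and the actual project stores its history in the Unity frontend rather than in JavaScript.

```javascript
// Hypothetical timeline of debug steps; each entry is an independent snapshot.
class DebugTimeline {
  constructor() {
    this.snapshots = [];
  }

  // Store a deep copy so that later steps cannot alter the recorded history.
  record(file, line, callStack) {
    const snapshot = JSON.parse(JSON.stringify({ file, line, callStack }));
    snapshot.step = this.snapshots.length;
    this.snapshots.push(snapshot);
    return snapshot.step;
  }

  // Look up the program state at an earlier step (undefined if out of range).
  at(step) {
    return this.snapshots[step];
  }
}

const timeline = new DebugTimeline();
const stack = [{ functionName: "main", variables: { total: 0 } }];
timeline.record("app.js", 4, stack);
stack[0].variables.total = 9; // the program state moves on
timeline.record("app.js", 5, stack);
console.log(timeline.at(0).callStack[0].variables.total); // 0
console.log(timeline.at(1).callStack[0].variables.total); // 9
```

Copying the state at each step is what allows a past call stack to show the variable values as they were at that time.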

Navigation:

To provide the user with the most intuitive navigation possible, we use movement in the physical world to interact with the system. Movements such as walking in a room or moving the hands are transferred to virtual reality. By walking in the physical world, the user changes position within the application and can thus, for example, explore the timeline more closely, which helps in understanding the problem to be solved. If the user walks to a past point on the timeline, they see the call stack as it was at that time, with the variables set at that time and their assigned values. Furthermore, the code windows follow the user along the timeline. They also adapt to the selected point in the past and show which file was in focus at that time and which line of code was current. In this way, the user can examine past steps, as in a kind of time travel, and thus better understand the current program state.
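The mapping from the user's position to a point on the timeline can be sketched as a nearest-step lookup. This assumes that the debug steps are laid out at equal distances along one axis; the function name `stepIndexForPosition` and the spacing value are hypothetical and not taken from the project.

```javascript
// Hypothetical mapping from the user's position along the timeline axis
// to the index of the debug step shown there. Units and spacing are assumptions.
function stepIndexForPosition(position, spacing, stepCount) {
  if (stepCount <= 0) return -1;                        // nothing recorded yet
  const nearest = Math.round(position / spacing);       // closest step marker
  return Math.min(stepCount - 1, Math.max(0, nearest)); // clamp to valid range
}

console.log(stepIndexForPosition(3.2, 1.5, 10)); // 2
console.log(stepIndexForPosition(-4, 1.5, 10));  // 0
console.log(stepIndexForPosition(99, 1.5, 10));  // 9
```

Clamping keeps the focus on the first or last recorded step when the user walks beyond either end of the timeline.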

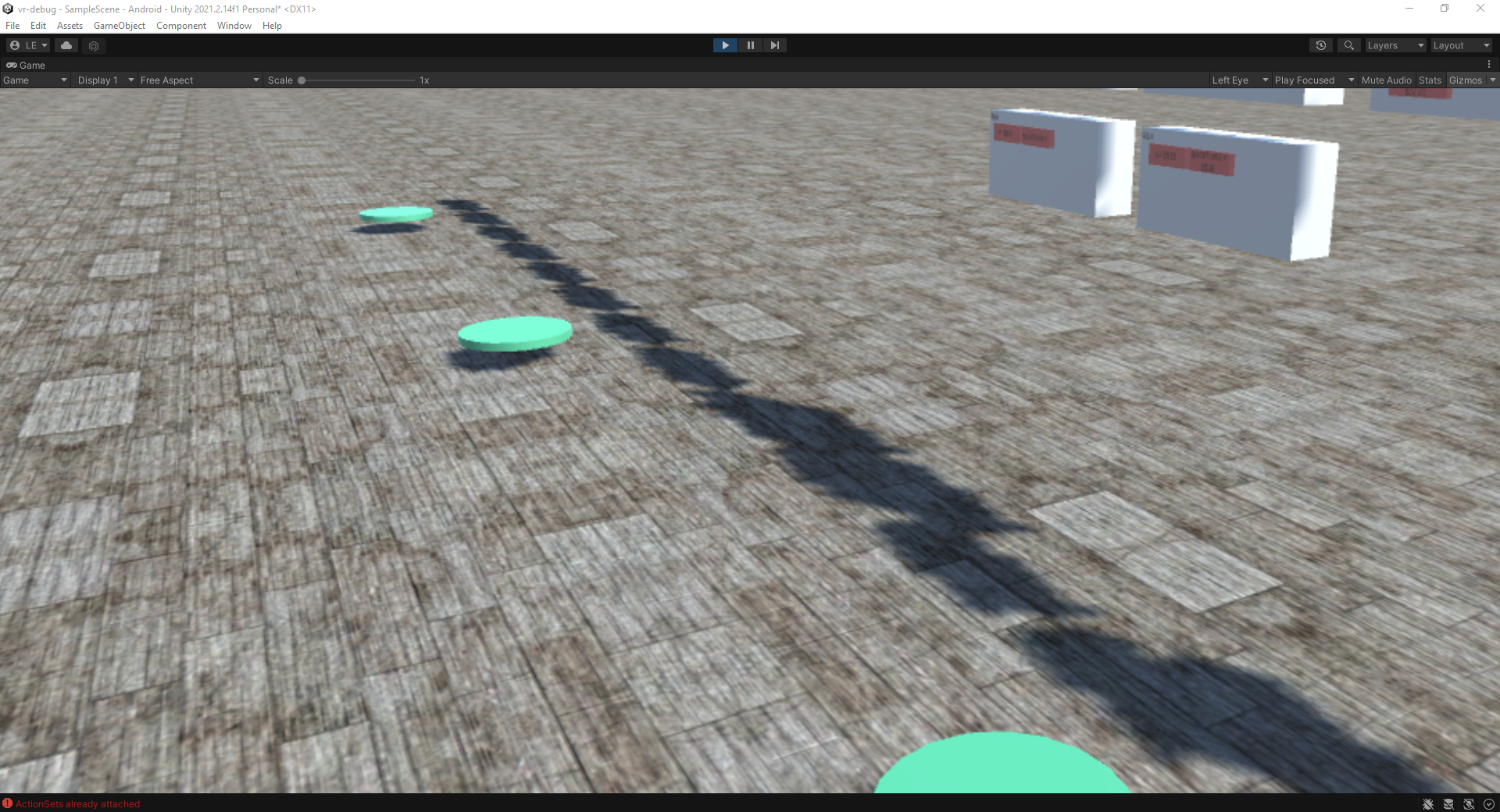

Figure 3 Screenshot 3D Debugging Teleportation platforms

Since users are likely to use the application indoors and therefore have limited room to move, teleportation is offered in addition to physical movement. If the desired point on the timeline is too far away to walk to, the user can teleport to it using the controller. Since this project is also intended to encourage users to move while working, the options for teleportation were deliberately limited. A teleportation platform is placed on the floor at every fifth debug step, and the user can teleport to it by selecting it with a controller. If this is not exactly the desired location, the user can walk from there to the desired location without running out of space in the physical world.

Indicate the maintainer's current focus:

Orientation cues show users where they currently are in the code and allow them to switch the focus within the code [2]. The user's focus is indicated in two ways. Firstly, an arrow marks the position of the active step in the code window. Secondly, the user's current focus within the call stack timeline is indicated by the user's position. When the user walks around, the focus changes to the call stack block of the timeline that is in front of the user, and the position of the code window and the line connecting the call stack to the code window are updated accordingly.

V. Technical Implementation

Technical Equipment:

To use the system, users need VR glasses including a controller. The VR glasses must support tracking in the physical world so that movements, such as walking, can be interpreted by the system and transferred to the virtual world.

Figure 4 Photo of a participant of the User study using the VR goggles and controllers.

Frontend Implementation:

Unity is used as the frontend technology. Unity is a real-time 3D engine with an integrated development environment that is typically used for game development. In addition to conventional 3D applications, it can also be used to create VR applications. Advantages of this 3D engine include its extensive documentation, the large number of tutorials, and its pricing model: the engine is free to use until the project or game created with it earns more than a certain amount of money. Another advantage is that the engine works well with different target platforms and can export the created application to them, including the VR setup used in this project. During development, the VR glasses can be used through a connection to the computer. Unity can also export the application and install it on the VR glasses, so that the application can be used without an additional computer.

Figure 5 Screenshot of the Unity 3D engine during development

This project was programmed in Unity using the C# programming language. For this, Unity provides an interface that allows interacting with the engine. The code developed in Unity mainly takes care of visual things, such as displaying the code in 3D space, interpreting the user’s movements, or providing various interaction options for the user. Besides visual things, the frontend code also takes care of things like storing the debug history or communicating with the backend part of this project.

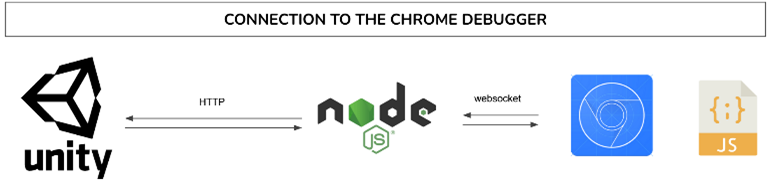

Backend Implementation:

For the core functions of a debugger, such as step-into, information about variables, or information about occurring errors, we did not develop our own debugger but relied on an existing one. In this project, the Google Chrome Debugger was used. It provides an API that makes it possible to interact with the debugger and to obtain information about the debug process. The Google Chrome Debugger is suited to debugging JavaScript code. Since this project is based on it, the 3D debugger can currently debug only JavaScript code. To make the Google Chrome Debugger API available, the Chrome browser must be started from the command line with an extra parameter. Afterwards, the web page or file containing the code to be debugged must be opened, and the Chrome Debugger must be opened as well. A WebSocket interface is then available for interacting with the debugger.
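The messages exchanged over this WebSocket interface are JSON objects. The sketch below shows how commands such as `Debugger.enable` and `Debugger.stepInto` from the Chrome DevTools Protocol could be built and how a `Debugger.paused` event could be reduced to the data the 3D view needs. The helper names `buildCommand` and `summarizePausedEvent` are hypothetical. The text does not name the start-up parameter; the sketch assumes a flag such as `--remote-debugging-port=9222`, which is commonly used for this purpose.

```javascript
// Sketch of the JSON messages exchanged with the debugger over the WebSocket.
// Assumption: Chrome was started with a flag such as --remote-debugging-port=9222;
// the source text does not name the parameter that was used.
let nextId = 1;

// Build a protocol command; each command carries a unique id so that the
// matching response can be identified later.
function buildCommand(method, params = {}) {
  return JSON.stringify({ id: nextId++, method, params });
}

// Extract function name and line of every call frame from a
// "Debugger.paused" event; returns null for any other message.
function summarizePausedEvent(raw) {
  const message = JSON.parse(raw);
  if (message.method !== "Debugger.paused") return null;
  return message.params.callFrames.map((frame) => ({
    functionName: frame.functionName || "(anonymous)",
    line: frame.location.lineNumber + 1, // protocol line numbers are 0-based
  }));
}

console.log(buildCommand("Debugger.enable"));
console.log(buildCommand("Debugger.stepInto"));
```

Only the message handling is shown here; opening the WebSocket connection itself is left out so that the example stays independent of any particular WebSocket library.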

Figure 6 Visualization of mapping the WebSocket API into an HTTP API

To avoid having to maintain a persistent WebSocket connection from the VR glasses, an additional server application was developed that converts the WebSocket interface into an HTTP interface. This application was implemented in Node.js. It connects to the Chrome Debugger at the beginning of the debug process, waits for requests on its HTTP interface, and converts the data it receives into the required format. This way, the Unity application can send commands to the debugger and receive data from it without having to maintain a constant connection.
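The core of such a bridge is matching each HTTP request to the debugger response that belongs to it. The following is a minimal, transport-independent sketch of that idea and not the project's server code: `createBridge`, `handleHttpRequest`, and `handleDebuggerMessage` are hypothetical names, and the WebSocket and HTTP layers are replaced by plain callbacks.

```javascript
// Hypothetical core of a WebSocket-to-HTTP bridge, independent of any transport.
// sendToDebugger(text) stands in for the WebSocket send function.
function createBridge(sendToDebugger) {
  const pending = new Map(); // command id -> callback that answers the HTTP request
  let nextId = 1;

  return {
    // Called for each incoming HTTP request with the parsed request body.
    handleHttpRequest(body, respond) {
      const id = nextId++;
      pending.set(id, respond);
      sendToDebugger(
        JSON.stringify({ id, method: body.method, params: body.params || {} })
      );
    },
    // Called for each message arriving from the debugger.
    handleDebuggerMessage(raw) {
      const message = JSON.parse(raw);
      const respond = pending.get(message.id);
      if (!respond) return false; // event or unknown id: not a response
      pending.delete(message.id);
      respond(
        message.error
          ? { ok: false, error: message.error }
          : { ok: true, result: message.result }
      );
      return true;
    },
  };
}

// Usage with stand-ins for the socket and the HTTP response.
const sent = [];
const bridge = createBridge((text) => sent.push(text));
let reply = null;
bridge.handleHttpRequest({ method: "Debugger.stepInto" }, (r) => { reply = r; });
bridge.handleDebuggerMessage(JSON.stringify({ id: 1, result: {} }));
console.log(sent[0], reply);
```

In a real server, `handleHttpRequest` would be called from an HTTP request handler and `handleDebuggerMessage` from the WebSocket message listener; events without an `id`, such as pause notifications, would need separate handling.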

VI. User Study

To test the application prototype and derive recommendations for further work, a small user study with six participants was conducted. The results are not representative, as the number of participants was small and their backgrounds were not very diverse. Nevertheless, the study gave a first impression of the acceptance of 3D-debugging as well as of the feel and usability of the prototype.

The participants of the study were required to already have some programming and debugging experience, but it was not necessary for them to have previous experience with virtual reality applications. To prepare the study, a test program was written which had to be debugged by the participants. The preparation also included the compilation of a small set of questions that could be answered during or after the execution of the test program.

After the participants had been recruited, the procedure was explained to them. With some guidance from the interviewers, all participants managed to debug the test program, find the cause of the error, and try out all the functionality the prototype offers. We observed that participants with prior experience in virtual reality applications familiarized themselves with the test environment more quickly and consequently needed less guidance.

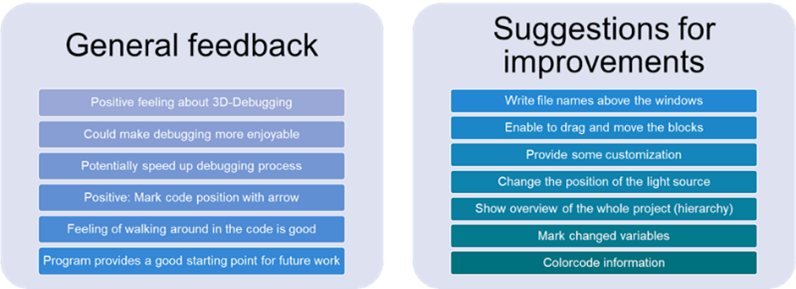

The main purpose of the user study was to get general feedback about 3D-debugging and the application, and to collect suggestions for improving the tested prototype. The questions the participants had to answer therefore covered topics such as their opinion of and general feeling about 3D-debugging; their impression of the design, layout, and visualization of information within the prototype's user interface; the intuitiveness of the navigation; and their overall impression of the application.

Although none of the participants had ever tried 3D-debugging before, the overall impression of this approach was positive. Through the possibilities offered by three-dimensional space and other benefits of virtual reality, debugging could be made more interesting and enjoyable. This may seem less significant because it is a non-functional advantage with no measurable result, but considering that software developers spend a significant amount of their time debugging programs, making this task more enjoyable is a desirable goal. Building on this, 3D-debugging could potentially speed up the debugging process. Finally, all participants agreed that they could imagine using a more advanced 3D-debugging application in their future working environment.

Regarding the current state of the tested application itself, the participants rated the navigation positively. According to them, the feeling of walking around in the code along a timeline strongly supports program comprehension, and the added teleportation functionality enables fast navigation over longer distances, so the application can also be used when only limited space is available in the physical environment. Furthermore, the participants found the highlighting of the current step within the overall context helpful. They especially mentioned the arrow marking the current code position and the line connecting the call stack information to the code file. In summary, all participants agreed that the tested application provides a valid starting point for further research and development of 3D-debugging.

As mentioned, the tested application provides a starting point and is therefore still limited in its functionality. Consequently, suggestions for improving the application were also collected during the user study. For the code windows in the background of the visualization, file names could be displayed for better orientation. In the foreground, where the call stacks are located, the participants suggested marking the variables that have changed between the previous and the current step. Feedback on the overall application included showing the hierarchy of the whole project, a function that becomes especially useful when debugging larger projects. Furthermore, the participants wanted to drag and move the blocks around. When implementing this feature, attention must be paid to the arrangement of the code blocks on the timeline, as free movement can quickly lead to a confusing and unstructured visualization. In general, it was suggested to color-code important information in order to highlight crucial content or changes and thereby support program comprehension. Building on this, more customization could be offered so that each user can adapt the design and representation of information to their own needs. To increase the contrast and readability of the visualized items, one participant suggested changing the position of the light source within Unity. This moves the shadows and visibly changes the overall illumination of the application.

The results of the user study described above are summarized in the following figure.

Figure 7 List of general feedback and suggestions for improvement collected as part of the user study.

VII. Future Work

Although the prototype provides only basic functionality to give an impression of 3D-debugging, the user study indicated that continuing this work might result in a product that software developers would accept. By combining the results of the user study with our own experiences while developing and testing the 3D-debugging tool, we derived some ideas for future work.

As an additional feature it would be helpful to be able to restart the debug process from the beginning within the application, which is not possible yet.

Aside from this, some general ideas for improving the debugging experience were formulated. For example, user-defined backgrounds could be provided, so that users of 3D-debugging could debug wherever they like, perhaps at the beach or in the forest. Personalization of the content is another important area for improving the user experience. Users want to be able to control which colours are used and how large the code blocks and the text are. Moreover, different layouts for organizing the elements within the visualization could be provided. This would give the user more freedom by fulfilling the need for personalization as well as the wish to move the blocks around, which was raised during the user study. At the same time, this approach keeps the elements in a fixed order and avoids the risk of confusion, as the call stack blocks remain attached to the timeline.

To make 3D debugging applicable in the working life of professional software developers, the visualization must be able to display the contents of a larger code base in an understandable and accessible way. Therefore, a concept consisting of different design elements must be developed to provide the relevant information. The user must be able to stay oriented within the program at all times and must be able to keep track of the changes that happened during the previous debugging steps. Presenting a large program in a comprehensible way will be one of the major challenges when designing 3D debugging for everyday use by software developers. Here, customization comes into play again. To avoid overwhelming the user with information, it must be possible to hide parts of the content so that the user can expand and view them when needed. Because everyone has a different strategy for program comprehension, it is important to focus on the overview and overall context first and to provide deeper, more detailed information when it is required. This can be done with design elements for visualization and navigation as well as with colours and textual or even acoustic descriptions. Such ideas call for a more interactive solution in which the user has more options and more room for making decisions than the current prototype provides.

As stated above, the prototype version of 3D-debugging opens up a wide range of possibilities for ongoing work. Especially if the requirements for displaying larger programs, along with usability and customizability, are taken into account during further development, 3D-debugging could greatly improve the debugging experience of software developers.

VIII. Conclusion

In this project, we have demonstrated how a 3D virtual reality environment can be used to comprehend a program and effectively debug it. As a part of this work, we have opened up the field of future study and research in the development and debugging of software in virtual 3D environments.

It is a known fact that a lack of fundamental knowledge about how programs are executed is an obstacle to software engineering education and training. Most of the time, traditional methods of debugging code are challenging. However, our findings indicate that tools in a 3D environment could be developed to directly support thorough comprehension of a program.

During the user study, we found that the participants had a favourable opinion of the general user interface, the navigational flow, and the information visualization in the prototype. The participants emphasized the navigation as a strong point of the application in its present state. The experience of moving through the code along a timeline greatly aids in understanding programs, and the addition of teleportation enables quick movement across long distances, so that the application can be used even when only a small physical area is available. Furthermore, the participants noted that highlighting the current step within the larger context was beneficial.

On the other hand, it must be acknowledged that the current prototype has a lot of room for improvement. Functionality such as drag-and-drop block repositioning, color-coding of elements, and highlighting of important information could all be added.

In summary, we have demonstrated that creating debugging tools in a virtual 3D environment is both feasible and advantageous. Powerful features might be introduced to improve the effectiveness of debugging and troubleshooting techniques.

Acknowledgment

We are grateful to Martin Hedlund for his profound suggestions on this project. Additionally, we are grateful to Professor Gerrit Meixner for his unwavering assistance. We also want to express our appreciation to everyone who took part in the user study and tested the prototype.

References

[1] Westbrook, L. (2006). Mental models: a theoretical overview and preliminary study. Journal of Information Science, 32(6), 563–579. https://doi.org/10.1177/0165551506068134

[2] Storey, M. A., Fracchia, F., & Müller, H. (1997). Cognitive design elements to support the construction of a mental model during software visualization. Proceedings Fifth International Workshop on Program Comprehension. IWPC’97. https://doi.org/10.1109/wpc.1997.601257

[3] K. Craik, The Nature of Explanation (Cambridge University Press, Cambridge, UK, 1943).

[4] P. Johnson-Laird, Mental models. In: M. Posner (ed.), Foundations of Cognitive Science (MIT Press, Cambridge, MA, 1989), 469–499.

[5] P. Johnson-Laird, Mental Models: Towards a Cognitive Science of Language, Inference, and Consciousness (Harvard University Press, Cambridge, MA, 1983) 397.

[6] Hussain, Afzal & Shakeel, Haad & Hussain, Faizan & Uddin, Nasir & Ghouri, Turab. (2020). Unity Game Development Engine: A Technical Survey. University of Sindh Journal of Information and Communication Technology. 4.
